IIT Delhi

NAVI - Navigation Assistant for Visually Impaired

Prof. Rohan Paul gave me a problem that seemed straightforward at first: indoor navigation for visually impaired people. When I started digging into it, I realized Google Maps works great outdoors, but stops at building entrances. That gap between outdoor and indoor navigation became the focus of my internship.

Timeline

June 2024 – August 2024

Role

Research Intern

Prof. Rohan Paul

Tools

Flutter

Node.js

AWS

C++

LLMs

Disciplines

Mobile Development

Backend Development

User Research

Hardware Integration

Accessibility Design

The NAVI Journey

NAVI began with a clear accessibility gap and grew into a complete navigation system, first as a mobile app and then as smart glasses. Each step was driven by user research and iterative testing with the people the system is meant to serve.

Understanding the Problem

Prof. Rohan Paul presented the challenge: indoor navigation for visually impaired people. While Google Maps works well outdoors, it stops at building entrances. Existing solutions like guide rails are expensive and not scalable. The gap between outdoor GPS and indoor navigation needed a technology-based solution that could work anywhere.

Building the Mobile App Solution

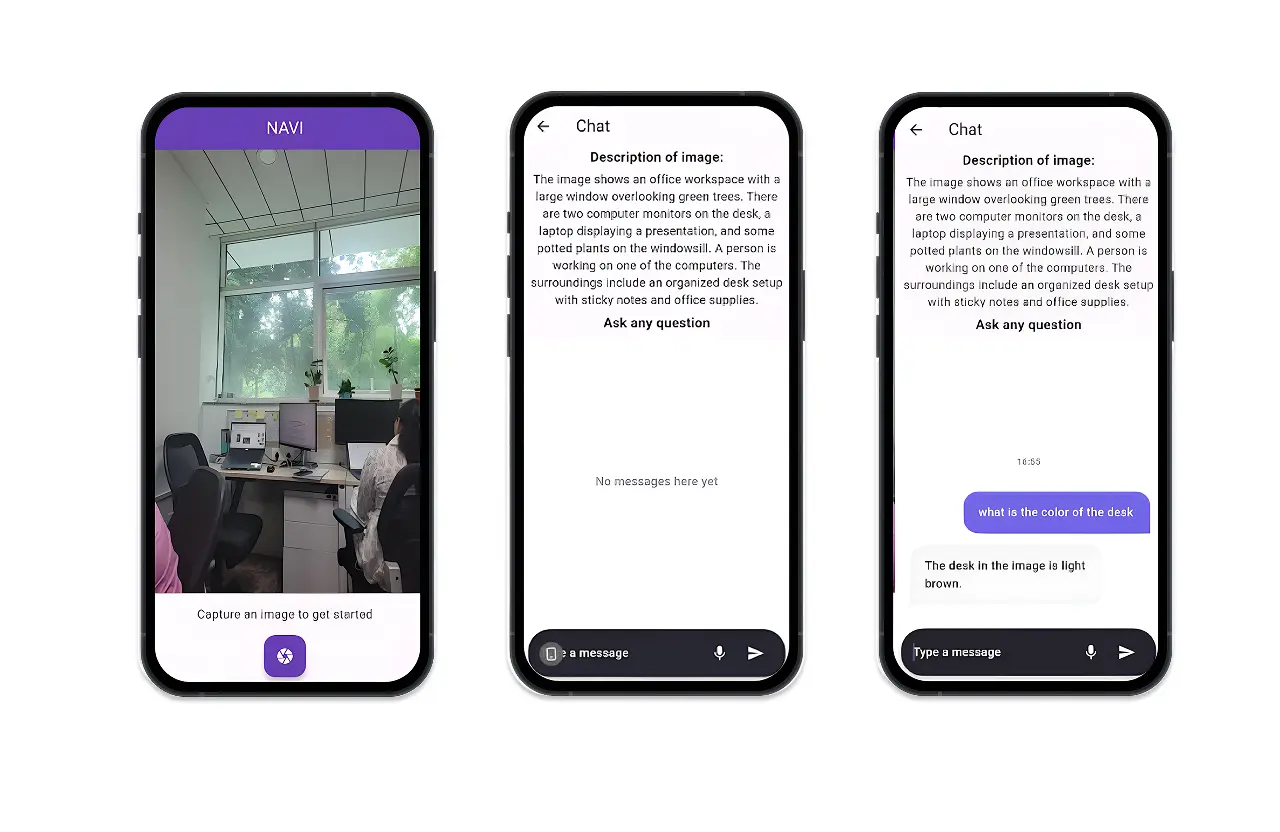

NAVI started as a mobile app with a simple concept: point your camera at your surroundings, and get a description that includes navigation guidance. The app uses your location and the image to provide context-aware directions for indoor spaces. Development took one week using Flutter for the frontend, Node.js for the backend, MongoDB for data storage, and AWS for hosting.
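As a rough illustration of the idea above, the backend could combine the user's location and the camera image into a single request for the vision model. This is a minimal sketch, not NAVI's actual code: the `LocationFix` and `DescribeRequest` shapes, the `buildDescribeRequest` function, and the prompt wording are all hypothetical.

```typescript
// Hypothetical shapes; field names are illustrative, not NAVI's real API.
interface LocationFix {
  latitude: number;
  longitude: number;
  placeName?: string; // e.g. a building name, if one is known
}

interface DescribeRequest {
  prompt: string;
  imageBase64: string;
}

// Build the text prompt and bundle it with the encoded camera frame.
function buildDescribeRequest(
  location: LocationFix,
  imageBase64: string
): DescribeRequest {
  // Fall back to raw coordinates when no place name is available.
  const place =
    location.placeName ??
    `${location.latitude.toFixed(5)}, ${location.longitude.toFixed(5)}`;

  const prompt = [
    "You are assisting a visually impaired person with indoor navigation.",
    `The user is near: ${place}.`,
    "Describe the scene in the image and give short, step-by-step directions",
    "toward the nearest entrance or doorway, mentioning any obstacles.",
  ].join(" ");

  return { prompt, imageBase64 };
}

// Example call with placeholder image data.
const request = buildDescribeRequest(
  { latitude: 28.545, longitude: 77.19, placeName: "Bharti Building" },
  "BASE64_IMAGE_PLACEHOLDER"
);
console.log(request.prompt);
```

Keeping the location inside the prompt text, rather than in a separate field, is one simple way to give the model context; the real system may structure this differently.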

Selecting and Optimizing the AI

I tested three LLMs (GPT-4o, Gemini, and LLaVA) on 12 test images, with each model's output rated by both blind and sighted users. GPT-4o gave detailed descriptions that called out doors and distances. Gemini was conversational but sometimes lacked precision. LLaVA was fast but missed navigational cues. GPT-4o with detailed prompts scored highest at 7.01/10 and became our foundation. I then refined the prompts and tested on 113 real-world images.
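A comparison like this comes down to averaging ratings per model and picking the highest. The sketch below shows one way to do that, assuming each rating is a 0 to 10 score. The `Rating` shape and the function names are hypothetical, the source does not say how the 7.01/10 figure was aggregated, and the sample values are placeholders rather than the study's data.

```typescript
// Hypothetical record: one rater's score for one model's description of one image.
interface Rating {
  model: string;
  imageId: number;
  raterGroup: "blind" | "sighted";
  score: number; // assumed 0-10 scale
}

// Mean score per model across all images and raters.
function meanScoreByModel(ratings: Rating[]): Record<string, number> {
  const sums: Record<string, { total: number; count: number }> = {};
  for (const r of ratings) {
    const entry = sums[r.model] ?? { total: 0, count: 0 };
    entry.total += r.score;
    entry.count += 1;
    sums[r.model] = entry;
  }
  const means: Record<string, number> = {};
  for (const model of Object.keys(sums)) {
    means[model] = sums[model].total / sums[model].count;
  }
  return means;
}

// Name of the model with the highest mean, or undefined if there are no ratings.
function bestModel(means: Record<string, number>): string | undefined {
  let best: string | undefined;
  let bestScore = -Infinity;
  for (const model of Object.keys(means)) {
    if (means[model] > bestScore) {
      best = model;
      bestScore = means[model];
    }
  }
  return best;
}

// Placeholder ratings for illustration only; these are NOT the study's data.
const sampleRatings: Rating[] = [
  { model: "model-a", imageId: 1, raterGroup: "blind", score: 8 },
  { model: "model-a", imageId: 1, raterGroup: "sighted", score: 6 },
  { model: "model-b", imageId: 1, raterGroup: "blind", score: 5 },
  { model: "model-b", imageId: 1, raterGroup: "sighted", score: 7 },
];

const means = meanScoreByModel(sampleRatings);
console.log(means, bestModel(means));
```

Keeping `raterGroup` on each record also makes it easy to filter and report blind and sighted ratings separately.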

Real-World Testing Adventures

On July 23rd, 2024, we conducted comprehensive field testing at five campus locations: RNI Park, Rajdhani Restaurant, SIT Building, Bharti Building, and SBI Bank. Each location presented different challenges. RNI Park showed the importance of camera angle consistency. Rajdhani Restaurant highlighted the awkwardness of rotating phones to find entrances. SIT Building revealed text recognition limitations, while Bharti Building exposed field-of-view constraints.

The Mobile App Limitations

Real-world testing revealed limitations that made a handheld phone impractical for consistent navigation assistance. Users held phones at inconsistent angles, sometimes too high and sometimes pointing down at the ground. The limited field of view meant missing side entrances or important signage. At RNI Park, a slight change in camera angle caused navigation to fail. At Bharti Building, the narrow phone camera couldn't capture the full O-shaped entrance layout. Most importantly, constantly holding and positioning a phone while trying to navigate was cumbersome and defeated the purpose of hands-free assistance.

Evolution to Smart Glasses

I researched existing smart glasses solutions including Envision Glasses, Drishti, and ARX Vision. Most required wired connections and manual mode selection between different functions. For NAVI, I chose the AMB82-mini hardware for its processing power and integrated camera capabilities. The key innovation was implementing a fully wireless system using hybrid BLE/Wi-Fi communication for seamless operation.

Final Results and User Impact

In final testing on 113 real-world images, the system received ratings of 8.0/10 from blind users and 7.65/10 from sighted evaluators. It provided detailed image descriptions and navigation guidance across diverse indoor environments, demonstrating practical applicability for visually impaired users.

Continuation as Final Year Project

After my internship ended, I was invested enough in the accessibility space that I continued this work as my final year project. NAVI evolved into Vyaakhya: smart glasses equipped with a context-aware AI agent that provides spoken directional guidance for indoor navigation to visually impaired users.
